One year of scraping real estate data

Why do this project?

I wanted to work with real-world data and I have a interest in the real estate market. I noticed that a real estate agency from Bogotá, Colombia (where I lived until 2023) offered high-quality data and images with minimal access restrictions.

What made this project particularly compelling was the opportunity to ‘augment’ the website’s information by collecting data hourly. Adding this time dimension revealed valuable insights that weren’t originally available. For example:

- Price history tracking: The agency doesn’t display price histories, but by collecting and comparing with previous versions, I can identify when and by how much prices have changed.

- Market duration analysis: I can determine how long properties typically remain available before being sold.

- Re-listing detection: I can identify properties that were previously listed and have returned to the market.

- Neighborhood trend analysis: I can spot activity patterns around certain neighborhoods or market segments.

The project offers additional benefits across several categories:

- Data transformation: Converting currencies or units of area (from Colombian pesos and square meters to other preferred units)

- Advanced querying: Running custom queries involving geospatial data

- Automation: Setting up personalized alerts for price changes or activity in specific neighborhoods or market segments, eliminating the need to constantly check the website manually

How was it done?

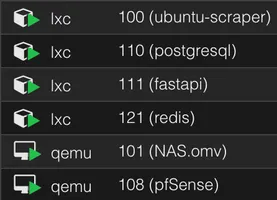

This project began as an exploration into self-hosting services and operating a data-collection pipeline. For hardware, I deployed an Intel N100 mini PC as a server running Proxmox as the operating system. This hosts:

- Linux containers (LXCs): PostgreSQL, Redis, a server running scraping scripts, and another one for the backend (FastAPI).

- Virtual machines: a virtual router (pfSense with openVPN), and a Network Attached Storage (NAS).

- Shared storage pool: manages resources across services, including stored image assets and backups.

N100 mini-PC running Proxmox

Linux containers and virtual machines

I started this project while at Recurse Center in 2024. Data collection began on January 8th, 2024, when I first ran the scraper script.

The scraping pipeline

Scraping begins with a Python script that queries the real estate agency’s website. The process happens in several stages:

-

Data Collection: The scraper connects to the public API and fetches property data from Bogotá, including property details, prices, and image identifiers.

-

Image Processing: For each listing, the scraper extracts photo identifiers and generates CloudFront URLs for downloading. An image downloader script handles the actual downloading, with robust error handling that marks images as “permanently unavailable” after multiple failed attempts.

-

Thumbnail Generation: After images are downloaded, another script processes them by:

- Converting images to the WebP format for better compression and quality

- Creating thumbnails for faster loading

- Uploading both full-sized images (converted to WebP) and thumbnails to S3 storage

-

Database Management: The scraper maintains records in PostgreSQL, tracking:

- Listing details and price history

- Neighborhood information

- Photo downloads and processing status

- Relationships between listings and photos

This diagram represents the database tables and relationships:

The entire pipeline runs on an hourly schedule, detecting new listings, price changes, and deactivating listings that are no longer available.

Dual Database Architecture

I decided to implement a dual database architecture. The system uses:

- Local PostgreSQL: For fast, reliable access during scraping operations

- Neon (PostgreSQL in the cloud): For backup and to provide data to the public-facing API

A dual database connection utility handles synchronization between these databases, ensuring data consistency while maintaining performance. This setup provides redundancy and separates the data collection from the public access layer.

Image Handling

The image handling process evolved significantly over time:

- Initial Version: Downloaded all images and stored them locally on the NAS

- Optimization Phase: Added WebP conversion, parallel processing, and error handling

- Cloud Integration: Now uploading thumbnails to S3 for more scalable storage and delivery

The current setup uses S3 as the storage backend for thumbnails, with full-size images still stored locally. This hybrid approach balances cost with performance and reliability.

Challenges

Dealing with Image Downloads at Scale

One of the biggest challenges was efficient image handling. The scraper needed to download, process, and store thousands of high-resolution property photos. I encountered several issues:

- Rate limiting: Too many rapid requests would trigger API protections

- Corrupt downloads: Some image downloads would fail or result in corrupted files. In some cases, it happened because the “images” were actually PDF files uploaded by mistake

- Resource consumption: Processing large images consumed significant CPU and memory

To address these challenges, I implemented:

- Connection pooling and retry logic with exponential backoff

- Image verification before processing

- Resource limits for ImageMagick to prevent memory issues

- A tracking system for failed downloads to avoid repeated attempts on problematic images

Database Synchronization

Maintaining two synchronized databases (local PostgreSQL and Neon cloud) proved challenging. I developed a custom dual database connection class that handles:

- Transaction management across both databases

- Fallback mechanisms when one database is unavailable

- Efficient batch operations to minimize network overhead

Exploring the data

Please visit bogota.fyi for insights about the data that was scraped in 2024 and to learn more about the project.